A Mighty Banana: Gemini 2.5 Flash Image Editing

Google’s new flagship image editing model ‘nano-banana’ (Gemini 2.5 Flash Image) dominates literally every other image editing model.

Just hours ago, Google finally released its new flagship image editing model, code-named ‘nano-banana’. Even while it was still anonymous, this model had taken the world by storm. So, now that DeepMind has officially claimed it, how good is it really?

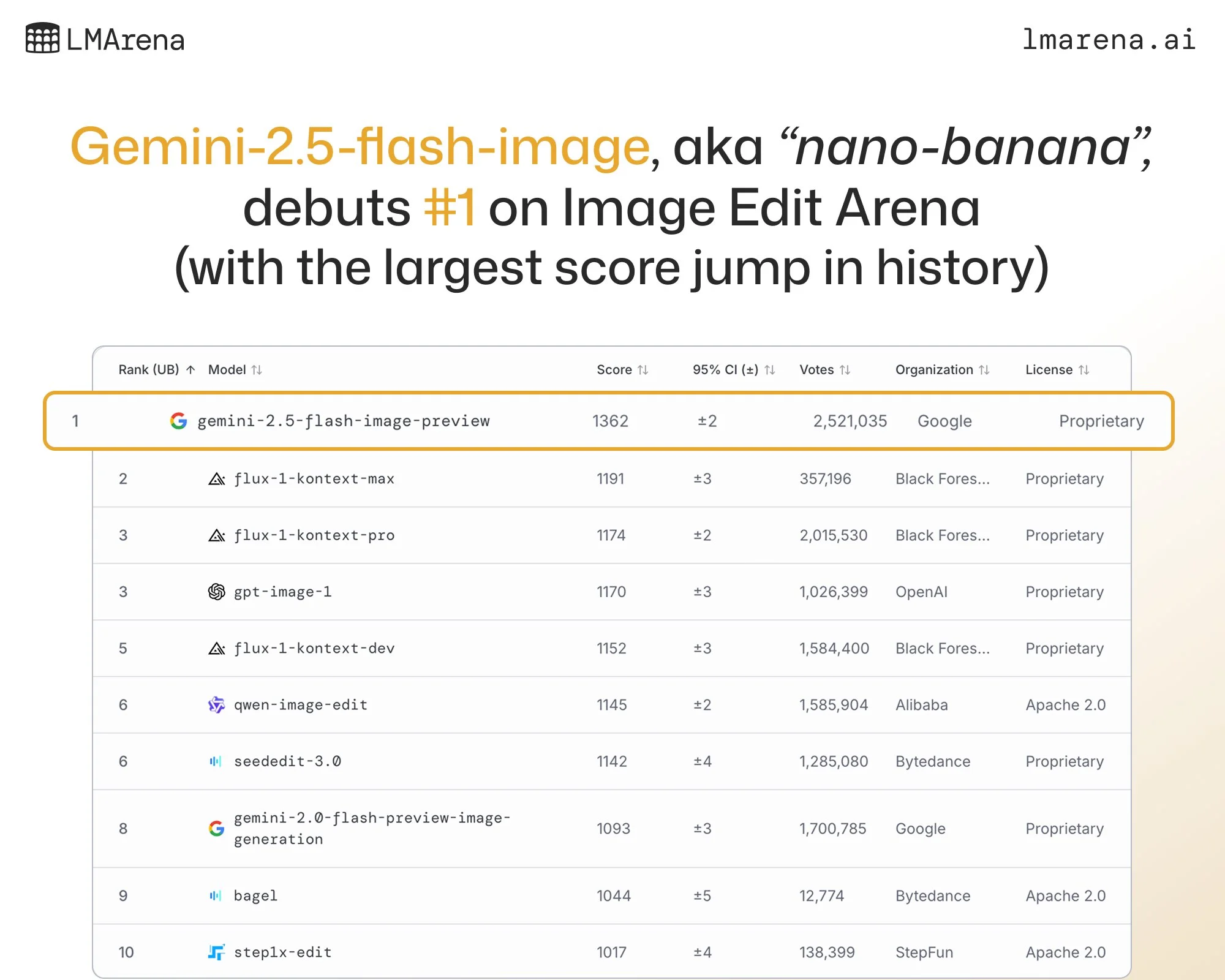

LMArena Dominance

Well, its LMArena ranking tells you almost everything you need to know. If you’re unfamiliar with LMArena, it’s one of the most trusted AI benchmarks today. You submit a prompt, two anonymous models each respond, and you pick the one you think is better. This measures something really important: end-user satisfaction.
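To make the pairwise-voting idea concrete, here is a minimal sketch of how head-to-head votes can be turned into ratings with a classic online Elo update. It is an illustration only: LMArena’s leaderboard is computed with a Bradley-Terry fit over all votes rather than one vote at a time, and the K-factor of 32, the starting rating of 1000, and the model names and votes below are all hypothetical.

```python
from collections import defaultdict


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def update(ratings: dict[str, float], winner: str, loser: str, k: float = 32.0) -> None:
    """Apply one online Elo update for a single head-to-head vote."""
    e_win = expected_score(ratings[winner], ratings[loser])
    # The winner gains k * (1 - expected); the loser loses exactly the same amount.
    delta = k * (1.0 - e_win)
    ratings[winner] += delta
    ratings[loser] -= delta


# Hypothetical (winner, loser) pairs from anonymous side-by-side battles.
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
    ("model_a", "model_b"),
]

# Every model starts at an assumed rating of 1000.
ratings: dict[str, float] = defaultdict(lambda: 1000.0)
for winner, loser in votes:
    update(ratings, winner, loser)

for name, rating in sorted(ratings.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {rating:.1f}")
```

Because each update moves points from the loser to the winner, the total rating pool stays constant; upsets against higher-rated models move ratings more than expected wins do.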

For image models, LMArena (and similar head-to-head battles, such as those hosted by Artificial Analysis) is really the only meaningful benchmark, since there’s no rigorous automated way to measure the quality of a generated image. Of course, it took #1 on the arena. But can you guess by how many Elo points? Maybe 30? 50?

Well, here’s the answer:

The Stunning Gap


Holy s***! A 170-point gap to the second-place model. The gap between the new Gemini model and second place is about the same as the gap from second place all the way down to 10th. For perspective, on the text leaderboard, dropping 170 points below the top model takes you down into the 20s, among older models and even small models like GPT-5 mini.
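For intuition about what a 170-point gap means, the standard Elo expected-score formula converts a rating difference Δ into a head-to-head win probability. Arena scores are reported on an Elo-like scale, so treat this as a rough reading rather than an exact prediction:

```latex
E_{\text{top}} = \frac{1}{1 + 10^{-\Delta/400}}
              = \frac{1}{1 + 10^{-170/400}}
              \approx \frac{1}{1 + 0.376}
              \approx 0.73
```

In other words, voters would be expected to prefer the top model in roughly 73% of head-to-head comparisons against the runner-up, compared with 50% for two evenly matched models.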

How to Use This Model

So, how can you use this model? Well, there are a few options:

- For normal users: available in AI Studio or through the Gemini app
- For developers:
  - Google’s API or OpenRouter, at an estimated $0.039 per image
  - the fal.ai inference platform, at $0.04 per image
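The $0.039 estimate follows from token-based pricing. At launch, Google listed image output at $30 per million output tokens, with each generated image counting as 1,290 output tokens (launch figures; check the current pricing page before budgeting):

```latex
1290~\frac{\text{tokens}}{\text{image}} \times \frac{\$30}{10^{6}~\text{tokens}}
  = \$0.0387 \approx \$0.039~\text{per image}
```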

Advantages and Examples

Character and Item Consistency

The biggest advantage of Gemini 2.5 Flash Image is character and item consistency when combining multiple images or making edits. Instead of producing a vaguely similar person or object, it keeps them almost identical to the originals.

Here’s the example fal.ai loads by default, which is frankly incredible:

When asked to combine these two pictures:

It generates this final output:


Wow! That’s incredible.

Native Reasoning Capabilities

It also seems to have some reasoning capabilities native to the model, which can be very important.

Given this image of a balloon and a cactus:

It can predict that the balloon will explode on the cactus:


For reference, this is what Flux Kontext, the second-best image editing model, does with the same prompt:


That’s pretty standard for these image models: with really clear instructions they shine, but they don’t really understand the world. That understanding is what makes Gemini 2.5 Flash Image so powerful, and it will certainly matter for many AI applications to come.

Pricing Comparison

Overall, the model’s capabilities are frankly incredible, and the pricing is appealing too. For reference, here’s a pricing table:

| Model | Price per image (USD) |
|---|---|
| Gemini 2.5 Flash Image (nano-banana) | $0.04 |
| Flux Kontext Max | $0.08 |
| Flux Kontext Pro | $0.04 |
| Qwen Image Edit | $0.03 |
| GPT Editing | $0.04 - $0.17 |
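To see what these per-image prices mean at A/B-testing scale, here is a small Python sketch that multiplies each list price in the table by a batch size. It assumes flat per-image pricing; real bills may differ with resolution, retries, or provider fees, and the 100-image batch is just an example.

```python
# Per-image list prices in USD, taken from the pricing table above.
# GPT Editing is quoted as a range, so every entry is stored as (low, high).
PRICES: dict[str, tuple[float, float]] = {
    "Gemini 2.5 Flash Image": (0.04, 0.04),
    "Flux Kontext Max": (0.08, 0.08),
    "Flux Kontext Pro": (0.04, 0.04),
    "Qwen Image Edit": (0.03, 0.03),
    "GPT Editing": (0.04, 0.17),
}


def batch_cost(n_images: int) -> dict[str, tuple[float, float]]:
    """Return the (low, high) cost in USD of generating n_images with each model."""
    return {
        model: (round(low * n_images, 2), round(high * n_images, 2))
        for model, (low, high) in PRICES.items()
    }


for model, (low, high) in batch_cost(100).items():
    price = f"${low:.2f}" if low == high else f"${low:.2f} - ${high:.2f}"
    print(f"{model:<28} {price}")
```

At 100 images the spread is only a few dollars between the cheapest options, so for most experiments output quality matters far more than price.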

How to Actually Use Gemini 2.5 Flash

Here’s what I’ve learned after testing this model extensively. First, Gemini 2.5 Flash Image seems to have a working grasp of everyday physics; as the cactus example above shows, that is a big part of why its results look so good. Second, it works well with both terse and detailed prompts. A simple prompt will usually still get you the result you wanted, but if you’re extremely clear about what you want, the model follows your instructions with a precision I haven’t seen from any other model.
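One way to apply the “be extremely clear” advice is to assemble edit prompts from explicit parts: what to change, what must stay the same, and the desired look. The helper below is a hypothetical sketch (`EditPrompt` and `join_items` are illustrative names, not part of any Gemini API); it only produces a text prompt you could paste into AI Studio or send through an API.

```python
from dataclasses import dataclass, field


def join_items(items: list[str]) -> str:
    """Join items as 'a', 'a and b', or 'a, b and c'."""
    if len(items) <= 1:
        return "".join(items)
    return ", ".join(items[:-1]) + " and " + items[-1]


@dataclass
class EditPrompt:
    """Hypothetical structure for a precise image-edit instruction."""
    change: str                                    # the edit itself
    keep: list[str] = field(default_factory=list)  # things that must not change
    style: str = ""                                # optional look/lighting notes

    def render(self) -> str:
        parts = [self.change.rstrip(".") + "."]
        if self.keep:
            parts.append(f"Keep {join_items(self.keep)} exactly as in the original image.")
        if self.style:
            parts.append(self.style.rstrip(".") + ".")
        return " ".join(parts)


# A terse prompt still works; the structured version spells out the constraints.
simple = EditPrompt(change="Put the balloon next to the cactus")
precise = EditPrompt(
    change="Move the red balloon so it touches the cactus spines",
    keep=["the cactus", "the background", "the camera angle"],
    style="Photorealistic, soft afternoon light",
)
print(simple.render())
print(precise.render())
```

Listing what must *not* change is the part terse prompts usually leave out, and it is where a model with strong consistency has the most to work with.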

Real-World Applications

Here are several potential applications, some of them startup opportunities:

Product photography for ads: you can take a basic product shot and seamlessly place it in different environments (a beach, an office, a kitchen counter) while the model keeps the lighting believable and the product itself consistent. Because each image takes only a few seconds and a few cents, you can generate dozens or hundreds of variations for A/B testing before recreating the best option in a professional studio.
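Generating dozens or hundreds of variations is mostly a matter of enumerating prompt combinations. This sketch builds every combination of a few scene parameters and estimates the batch cost before anything is sent to an API. The product name, environments, lighting, and angles are hypothetical, and the per-image price is the ~$0.039 Google API estimate quoted earlier.

```python
from itertools import product

PRICE_PER_IMAGE = 0.039  # USD, Google API estimate quoted earlier; check current pricing

product_name = "stainless steel water bottle"  # hypothetical product
environments = ["on a sunny beach", "on an office desk", "on a kitchen counter"]
lighting = ["morning light", "golden hour", "soft studio light"]
angles = ["front view", "three-quarter view"]

# One prompt per (environment, lighting, angle) combination: 3 * 3 * 2 = 18 variants.
prompts = [
    f"Place the {product_name} from the reference photo {env}, {light}, {angle}. "
    "Keep the product's shape, color, and label unchanged."
    for env, light, angle in product(environments, lighting, angles)
]

print(f"{len(prompts)} variants, estimated cost ${len(prompts) * PRICE_PER_IMAGE:.2f}")
print(prompts[0])
```

Adding one more option to any list multiplies the batch size, so it is worth printing the estimated cost before submitting a large run.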

For content creators, this is a game-changer. YouTubers can generate custom thumbnails that actually make sense, and Instagram influencers can edit themselves into exotic locations with results that generally look real.

The e-commerce integration potential is insane. Imagine customers uploading a photo of their room and seeing the store’s furniture placed in it at the proper scale. Combined with world models like Genie 3, they could even walk around the room and look at it. Many apps already exist for fashion sites that show how clothes would look on your body. However, this model is so much better that it raises the question of whether those specialized startups are even necessary, since its outputs from simple prompts already look professionally shot.

One weird trick: you can use its editing capabilities to fix AI-generated images from other models.

Final Thoughts

Not only is this by far the best image editing model we’ve seen yet, it is also very affordably priced. That’s not to say it will replace Photoshop; far from it, in fact. It still struggles with extremely high-precision, pixel-level edits and with consistency at that level of detail.

But for amateur use, or as the engine behind AI-based image apps, this has unbelievable potential. I’ll leave it here so you can go try it yourself, but I guarantee you’ll be blown away, even if you’ve tried every other image editing model to date.

Disclaimer

The information in this article is accurate to the author's best knowledge as of August 26, 2025. The views and opinions expressed in this article are those of the author alone and do not necessarily reflect the official policy or position of any organization. This content is provided for informational purposes only and should not be construed as professional advice. While we strive for accuracy, we make no representations or warranties of any kind, express or implied, about the completeness, accuracy, reliability, or suitability of the information contained herein. Any reliance you place on such information is strictly at your own risk.
